Speed through safety: How AI risk management works

Risk management often comes too late. Instead of providing guidance early on, it is frequently brought in only after decisions have been made and implemented, by which point the solution may already be outdated. As a result, risk, legal and compliance departments in the AI field are quickly seen as obstacles that slow down innovation.

© FIA

But it doesn't have to be that way. Think of a racing car: why does it have particularly good brakes? Not so that it can drive slowly, but so that it can go faster and still make the next bend. Risk management can be understood in exactly this way: as a prerequisite for speed and success.

This requires three things:

Including business risks: looking beyond regulatory and operational aspects

Considering the entire life cycle: keeping an eye on risks from the initial idea to decommissioning

Anchoring it as an enabler: making risk management part of decision-making, not just a control function

This is where the NIST AI Risk Management Framework comes in: it translates these principles into a clear structure and makes them practical for companies to apply.

The NIST AI Risk Management Framework

The NIST AI Risk Management Framework (AI RMF), published by the US National Institute of Standards and Technology, was developed to help organizations use AI safely and responsibly. It considers opportunities and risks together and thus offers practical guidance for companies that want their AI initiatives to succeed.

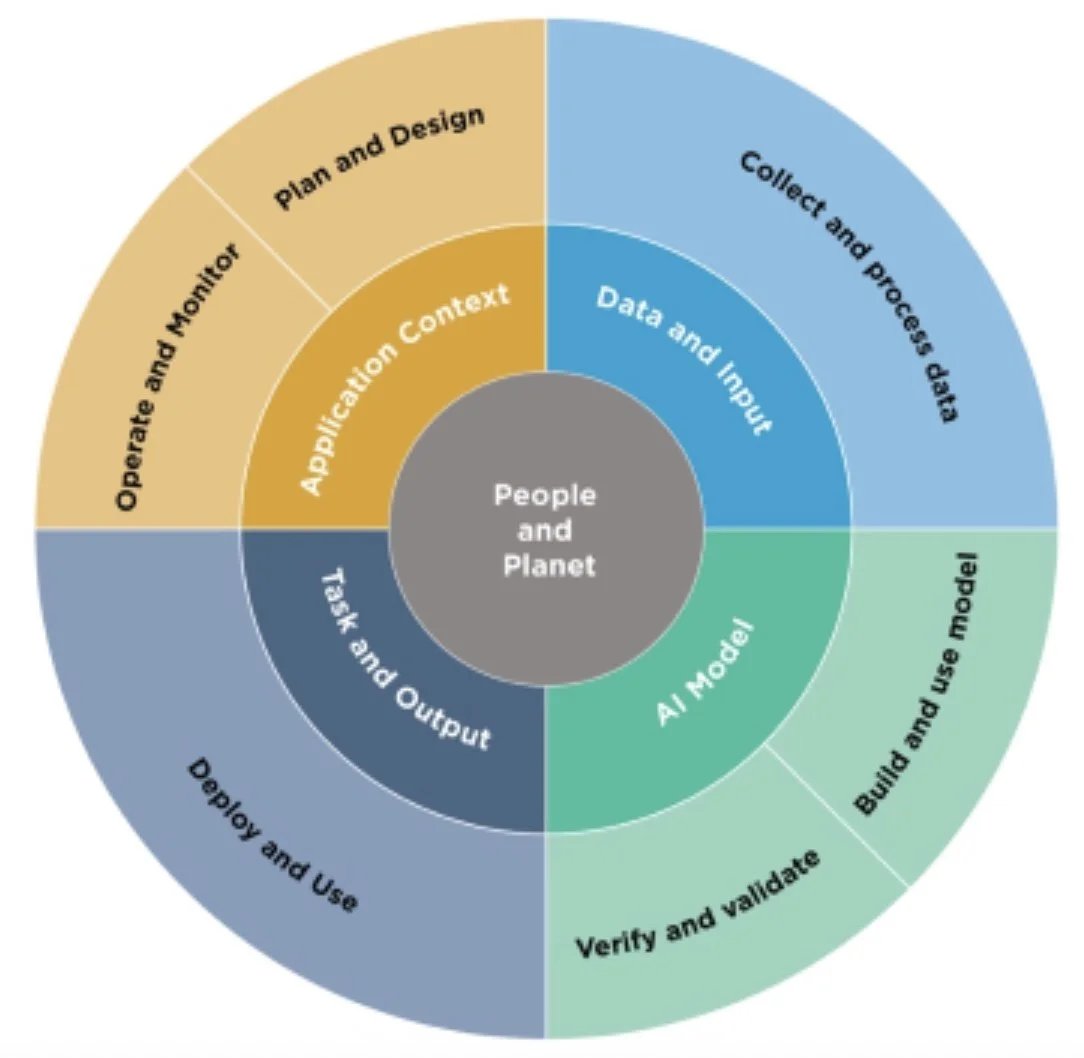

The NIST AI Risk Management Framework covers the entire life cycle of AI systems, from initial planning to decommissioning. It focuses not only on technical issues but also on the application context, data quality, model validation, the impact of the outputs and the social, legal and ethical implications (people & planet).

Source: https://www.nist.gov/artificial-intelligence

Two guiding principles make the difference:

Stakeholders: AI affects many stakeholder groups, from developers and management to regulators and end customers. Effective risk management deliberately and actively incorporates these different perspectives.

Life cycle: Risks and opportunities change over the entire life cycle of an AI system. What is a technical risk during development can become a reputational or legal risk during operation.

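At its core are four functions: Govern, Map, Measure and Manage. Govern establishes the culture, policies and accountability that apply across the other three; Map establishes the context and identifies risks; Measure analyzes, assesses and tracks them; and Manage prioritizes them and acts on them. Together, they form a structured approach for identifying risks early and managing them effectively, laying the foundation for successful AI initiatives.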

AI risks under control

Would you like to find out more and get a concrete handle on the risks and opportunities of your AI initiatives? Then we cordially invite you to the workshop "AI risks under control - a practical workshop for decision-makers" at the CNO Panel 2025 in Bern.

In this interactive format, you will first be introduced to the NIST framework, which offers a structured way to take a holistic view of the opportunities and risks of AI initiatives. You will then deepen this knowledge in a playful way through Risk Poker based on concrete case studies: risks are assessed jointly, differing assessments are made visible and clear priorities are set. Finally, we will use the Risk Bot to demonstrate live how modern AI tools can support risk management and be integrated directly into decision-making processes.
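The workshop's actual Risk Poker rules are not described here. Purely as an illustration, the sketch below assumes a planning-poker style round: each participant plays one card from 1 (negligible) to 5 (critical) per risk, the average card becomes the priority score, and a large spread between the highest and lowest card marks a risk the group should discuss before voting again. The risk names, the votes, the `evaluate` function and the threshold of 2 are all hypothetical examples.

```python
from statistics import mean

# Hypothetical Risk Poker round: each participant secretly plays one card
# (1 = negligible, 5 = critical) per risk; all cards are revealed together.
votes = {
    "Biased training data": [4, 5, 4, 2],
    "Model drift in production": [3, 3, 4, 3],
    "Unclear liability for AI output": [2, 5, 1, 4],
}

# Assumed rule: a spread of 2 or more means the group discusses and re-votes.
DISAGREEMENT_THRESHOLD = 2


def evaluate(votes):
    """Return risks sorted by average card, flagging divergent assessments."""
    results = []
    for risk, cards in votes.items():
        spread = max(cards) - min(cards)  # how far apart the assessments are
        results.append({
            "risk": risk,
            "score": round(mean(cards), 2),
            "spread": spread,
            "discuss": spread >= DISAGREEMENT_THRESHOLD,
        })
    # Highest average first: this ordering becomes the priority list
    return sorted(results, key=lambda r: r["score"], reverse=True)


for row in evaluate(votes):
    status = "discuss" if row["discuss"] else "agreed"
    print(f'{row["score"]:.2f}  spread={row["spread"]}  {status:7}  {row["risk"]}')
```

The average alone would hide that one participant rated a risk 1 and another rated it 5; tracking the spread is what makes such differences visible so they can be discussed before priorities are fixed.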

As a participant, you will take away valuable ideas: a practical catalog of relevant AI risks, a set of Risk Poker cards for your own organization and first-hand experience of how a Risk Bot can be integrated into governance and decision-making processes.